Opened 18 years ago

Last modified 2 years ago

#6148 new New feature

Add generic support for database schemas

| Reported by: | Erin Kelly | Owned by: | |

|---|---|---|---|

| Component: | Database layer (models, ORM) | Version: | dev |

| Severity: | Normal | Keywords: | oracle postgresql mysql schemas |

| Cc: | erik.engbrecht@…, gabor@…, jaensch@…, Matt Boersma, bruth@…, hcarvalhoalves@…, Joey Wilhelm, Oldřich Jedlička, iacobs+django@…, grf@…, faheem@…, lameiro@…, shaun_stanworth@…, hr.bjarni+django@…, hector@…, sven@…, bas@…, dvanliere@…, Andrii Kurinnyi, martin.paquette@…, gonzalemario@…, ckarrie@…, Brillgen Developers, mgventura, bituderr, dcassidy36@…, qbikk@…, dcwatson@…, ash@…, npeihl, mike@…, tgecho, mmitar@…, anssi.kaariainen@…, andrep, pmountifield@…, mprittie, davidhalter88@…, net147, esauro@…, thisgenericname@…, s3v, gioviboy@…, tomek@…, w2lkm2n@…, dpwrussell@…, manelclos@…, ghislain.leveque@…, autodidacticon, bnafta@…, JorisBenschop, david.fischer.ch@…, axel.rau@…, trbs@…, zerks0@…, Adam Brenecki, Carlos Palol, František Svoboda, Ryan Hiebert, curtis@…, Adrien Delessert, Petr Přikryl, Ülgen Sarıkavak | Triage Stage: | Accepted |

| Has patch: | yes | Needs documentation: | no |

| Needs tests: | no | Patch needs improvement: | yes |

| Easy pickings: | no | UI/UX: | no |

Description

There is frequently a need for Django to access data from tables in other schemas; this is especially true when building Django apps on top of legacy databases. Currently, the proposed solution is to add support for the set search_path command (#1051) via custom initialization sql (#6064). This is a good solution for PostgreSQL users, but it will not solve the problem in Oracle, which has no analogue of set search_path.

Solving the problem in a generic way will require adding a per-app or per-model db_schema option, in order to issue SQL queries using the syntax "schema_name"."table_name". This may also require more advanced introspection (at least in Oracle) to determine whether the table already exists when running management commands.

A current workaround in Oracle is to manually create private synonyms inside the Django schema, referencing the necessary tables from other schemas. However, the management commands do not currently recognize the existence of synonyms via introspection. Additionally, the synonym solution may not be sufficiently generic in the long term.

Attachments (17)

Change History (207)

comment:1 by , 18 years ago

| Triage Stage: | Unreviewed → Accepted |

|---|

by , 18 years ago

| Attachment: | generic-db_schema-r8308.diff added |

|---|

comment:2 by , 18 years ago

| Keywords: | mysql added |

|---|

I added support for using db_schema = 'whatever' in models.

It works for mysql at least but that's the only one I know about.

by , 18 years ago

| Attachment: | generic-db_schema-r8319.diff added |

|---|

remade some stuff and added testing and doc. Tested with mysql and sqlite so far

comment:4 by , 18 years ago

| Owner: | changed from to |

|---|---|

| Status: | new → assigned |

comment:5 by , 18 years ago

| Owner: | changed from to |

|---|---|

| Status: | assigned → new |

by , 18 years ago

| Attachment: | generic-db_schema-r8463.diff added |

|---|

Still some issues when testing, not the test themself

comment:6 by , 18 years ago

| Has patch: | set |

|---|---|

| Patch needs improvement: | set |

| Status: | new → assigned |

There are still some issues with testing. See discussion at http://groups.google.com/group/django-developers/t/bc0cd2e15c741e0a

by , 18 years ago

| Attachment: | generic-db_schema-r8696.diff added |

|---|

Works with postgres and mysql but testing only works on postgres due to mysqls schema implementation.

follow-up: 16 comment:7 by , 17 years ago

| Owner: | removed |

|---|---|

| Status: | assigned → new |

| Triage Stage: | Accepted → Unreviewed |

I don't have time to fix this right now... Better someone else does it.

comment:9 by , 17 years ago

| Cc: | added |

|---|

comment:10 by , 17 years ago

| Cc: | added |

|---|

comment:11 by , 17 years ago

| Cc: | added |

|---|

comment:12 by , 17 years ago

| Cc: | added |

|---|

comment:13 by , 17 years ago

| Cc: | added |

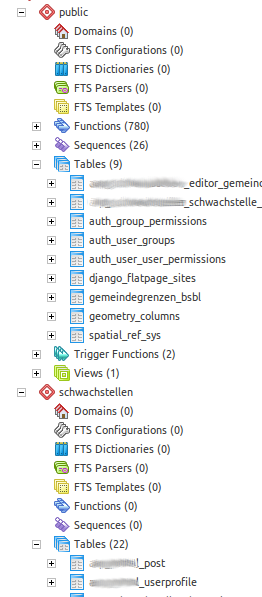

|---|

comment:14 by , 17 years ago

| Cc: | added |

|---|

comment:15 by , 17 years ago

| Cc: | added |

|---|

follow-up: 17 comment:16 by , 17 years ago

Replying to crippledcanary:

I don't have time to fix this right now... Better someone else does it.

Fixing this should change all the logic at django.db.backends.create_test_db, as for MySQL schemas are not really schemas, but databases - therefore, there should be not one, but several test_* databases.

I'm interested in fixing this so schema support can be checked in (really needed on PostgreSQL), but want feedback from MySQL users.

So, are MySQL databases really equivalents of schemas? If databases are like schemas, it would need to patch model's db_schemas to test_schemaname while testing. I don't like the idea of monkeypatching names for tests, as custom SQL will fail with different "schema" (not really) names. Isn't cleaner to fallback to less features for MySQL (as we do for SQLite)?

comment:17 by , 17 years ago

| Cc: | added |

|---|

Replying to anonymous:

So, are MySQL databases really equivalents of schemas? If databases are like schemas, it would need to patch model's db_schemas to

test_schemanamewhile testing. I don't like the idea of monkeypatching names for tests, as custom SQL will fail with different "schema" (not really) names. Isn't cleaner to fallback to less features for MySQL (as we do for SQLite)?

Just few notes from a random reader:

- I had a look at MySQL manual and the

CREATE SCHEMAcommand is synonym toCREATE DATABASE(see 12.1.6. CREATE DATABASE Syntax). The database name is rather flexible and can contain any character exceptNUL(\0) - the name is coded in a special way for the file system (see 8.2.3. Mapping of Identifiers to File Names).

- The patch contains

DROP SCHEMAIF EXISTSextension for PostgreSQL as well, but it has been supported since the 8.2 version. Maybe it could be noted somewhere.

comment:18 by , 17 years ago

- Also the

SHOW SCHEMAScommand is a synonym toSHOW DATABASES(see 12.5.5.11. SHOW DATABASES Syntax).

comment:19 by , 17 years ago

| Cc: | added |

|---|

comment:20 by , 17 years ago

I would suggest to drop the MySQL support, because when you drop the database, you normally (PostgreSQL) drop also the schemas together. But because MySQL doesn't support real schemas, you cannot say which "schema" belongs to which "database" - so you don't know what to drop. Maybe some Django dev can decide (I'm not the one) :-)

comment:21 by , 17 years ago

I think it's an error to forbid PostgreSQL users access to the feature because MySQL implementation doesn't match. I'm in favor of dropping MySQL support to get this ready for commit.

comment:22 by , 17 years ago

I've just looked at the patch a little bit more and I see problem with inconsistently using the schema in table names - there is a problem with syncing:

What is the full list of existing tables? Should it be with the schema in the name or without? The problem can be seen in the syncdb.py part that adds

+ # Add model defined schema tables if anny + if model._meta.db_schema: + tables += connection.introspection.schema_table_names(model._meta.db_schema)

This adds all tables from the specified schema to the known list of tables (but without the schema name). Those tables could be unrelated to the DB search path! This is compared to

db_table(that includes the schema) on lines 68-69

if connection.introspection.table_name_converter(model._meta.db_table) in tables: continue

The same applies to the sql.py part of the patch.

I also miss the possibility to define global DATABASE_SCHEMA.

There is certainly at least one problem in syncing now. Maybe it is possible to fix it easily, but it means to think about it more in depth (to have in mind the user specified schema and the DB search path). Maybe the only fix is to disallow the per model schema definition and to allow only the global one, but as I'm not expert in this area, I might be wrong :-)

by , 17 years ago

| Attachment: | generic-db_schema-r11231.diff added |

|---|

follow-up: 24 comment:23 by , 17 years ago

Since this ticket has been seeing some activity lately, I decided to put some work in on the patch. The old patch worked by fudging the schema into the db_table attribute, which is sort of a hack. I've changed it to combine db_schema and db_table into an entirely new attribute, qualified_name.

I've also fixed up a number of areas that were still passing around raw table names, including I think the case that oldium pointed out. Finally, I've made the necessary changes in the Oracle backend to get this to work.

I'm not too keen on dropping MySQL support just because it's difficult to test. To the end-user, Django is not in the business of creating and dropping schemas, and so it shouldn't matter what a schema actually represents as long as we can reference the tables inside it. Testing is also complicated in Oracle where "schema" is basically synonymous with "user", and I don't have the test fully working yet there either.

comment:24 by , 17 years ago

Replying to ikelly:

Since this ticket has been seeing some activity lately, I decided to put some work in on the patch. The old patch worked by fudging the schema into the db_table attribute, which is sort of a hack. I've changed it to combine db_schema and db_table into an entirely new attribute, qualified_name.

Please have a look at my patch for your patch (applies after you one), I've found some quotation problems, also the index name in CREATE INDEX in PostgreSQL cannot contain schema name, because the index is always created in the same schema as the parent table (see 8.2.13 CREATE INDEX).

by , 17 years ago

| Attachment: | generic-db_schema-update.patch added |

|---|

Incremental patch that fixes CREATE INDEX problems, some quotations and supports new global settings.DATABASE_SCHEMA

comment:25 by , 17 years ago

| Cc: | added |

|---|

comment:26 by , 17 years ago

| Cc: | added |

|---|

comment:27 by , 17 years ago

Hi again all, nice to see that there is some interest.

I started working on this one again and you can keep track of progress at http://github.com/kmpm/django/tree/master[[BR]]

I had some issues applying the patches but that is fixed and added some new stuff as well. I totally agree with the global DATABASE_SCHEMA and the qualified_name attribute that combines them.

The changes I made so far enables syncing again but I want to do some more work before posting another patch. I will eventually turn up as user "kmpm" here as well, to keep usernames in sync :-)

comment:28 by , 17 years ago

| milestone: | → 1.2 |

|---|---|

| Owner: | set to |

| Status: | new → assigned |

comment:30 by , 17 years ago

The correct branch for my work on this is http://github.com/kmpm/django/tree/generic-db_schema

and nothing else

comment:31 by , 17 years ago

| Cc: | added |

|---|

comment:32 by , 17 years ago

Hi, nice to see that this is finally being worked on.

The most elegant solution from my perspective would be if I could specify in my project-wide settings that I want to use schemas, and then I would get a generic schema implementation.

Then the database layer would create a schema for each app, so that instead of:

- auth_group (table)

- auth_user (table)

- auth_message (table)

- etc...

it would instead create it thus:

- auth (schema)

- group (table)

- user (table)

- message (table)

with the names all shorter and nicer.

But it should also allow per-app or per-model schema option to explicitly specify what you want to ease support of legacy databases etc.

Is what I am describing what is being worked on, or are you only making it so that you have to specify for every single model or app that you want it to live in a schema?

-thanks

comment:33 by , 17 years ago

| Cc: | added |

|---|

comment:34 by , 16 years ago

| Cc: | added |

|---|

comment:35 by , 16 years ago

| Cc: | added |

|---|

comment:36 by , 16 years ago

| Cc: | added; removed |

|---|

follow-up: 38 comment:37 by , 16 years ago

Replying to hejsan:

Is what I am describing what is being worked on, or are you only making it so that you have to specify for every single model or app that you want it to live in a schema?

-thanks

The main purpose of this for me is to have the site living in the different namespace, but in the same database (PostgreSQL); not to have the models/apps in dedicated namespaces. In this way you can run completely different sites (users, models...) within the same database - useful if you do not have full control over the database (hosting for example).

comment:38 by , 16 years ago

Replying to oldium:

The main purpose of this for me is to have the site living in the different namespace, but in the same database (PostgreSQL); not to have the models/apps in dedicated namespaces. In this way you can run completely different sites (users, models...) within the same database - useful if you do not have full control over the database (hosting for example).

That kind of support would still not benefit the majority of those that crave schema support, i.e. those that are trying to make django work with a legacy postgresql database, and those that are used to having tables sorted nicely into schemas.

Developers that are used to postgresql use schemas to keep their db tidy and managable, that is the foremost reason to use schemas. I don't see many usecases for what you are implementing over having each site in it's own database, although I'm sure there are some.

Would it be hard to implement my suggestion into your changes?

i.e. allowing each app to override the site-wide schema, and possibly each model also?

By the way, I don't mean to come of harsh, I'm really grateful for your work

follow-up: 40 comment:39 by , 16 years ago

The app specific schema might for example be specified in the init.py file in the apps root folder, or in a settings.py file in that folder, or just use the apps name. That would solve 99% of use cases IMO.

Of course we would need a setting USE_SCHEMAS to allow reuse of applications in non schema environments and to allow for an empty site-wide schema name.

follow-up: 41 comment:40 by , 16 years ago

Replying to hejsan:

The app specific schema might for example be specified in the init.py file in the apps root folder, or in a settings.py file in that folder, or just use the apps name. That would solve 99% of use cases IMO.

This is something for application-specific settings; I don't know if this is supported in Django currently. Anyway, each model in your application can have the schema specified explicitly, it could be also some application constant - like this:

APP_SCHEMA = "myschema"

class MyModel(models.Model):

...

class Meta:

db_schema = APP_SCHEMA

comment:41 by , 16 years ago

Replying to oldium:

class Meta:

db_schema = APP_SCHEMA

Is this something you have already implemented?

Can you provide a patch that would work with the current django development version? Or do you sync your github branch to the development version often?

This would certainly get things done, although it would be much more convenient to be able to have an app specific setting. Or have a global settings variable USE_APP_NAMES_AS_SCHEMA_NAMES = True. This would allow to put the standard django apps and 3rd party apps also into schemas.

follow-up: 43 comment:42 by , 16 years ago

Replying to hejsan:

Replying to oldium:

class Meta:

db_schema = APP_SCHEMA

Is this something you have already implemented?

The db_schema is already implemented in attached patches. There is no magic included, you just define a variable APP_SCHEMA (or any other name) at the top of the file and use it later on.

Can you provide a patch that would work with the current django development version? Or do you sync your github branch to the development version often?

I don't have anything at the moment, maybe kmpm (alias crippledcanary) has more.

follow-up: 45 comment:43 by , 16 years ago

Replying to oldium:

The db_schema is already implemented in attached patches. There is no magic included, you just define a variable APP_SCHEMA (or any other name) at the top of the file and use it later on.

Ok, great. Should I apply all the attached patches in order or just the last one?

What do you think about the USE_APP_NAMES_AS_SCHEMA_NAMES idea? Is it doable?

comment:44 by , 16 years ago

sorry for not keeping this one up to date.

The only thing supported for now is db_schema setting per model.

I really would like some of the db related tickets to be solved first. Don't have the numbers now but there are some multi-db and GSOC ones that change more and related stuff that we realy shoud wait for. Thats at least my +1.

And if anyone want's to pick this ticket up, please do.

comment:45 by , 16 years ago

Replying to hejsan:

Replying to oldium:

The db_schema is already implemented in attached patches. There is no magic included, you just define a variable APP_SCHEMA (or any other name) at the top of the file and use it later on.

Ok, great. Should I apply all the attached patches in order or just the last one?

You need the last two patches - generic-db_schema-r11231.diff and generic-db_schema-update.patch.

What do you think about the USE_APP_NAMES_AS_SCHEMA_NAMES idea? Is it doable?

I don't know about new features mentioned by kmpm, I'm currently busy with other things (non-Django). You are free to dive deeper into it :-)

comment:46 by , 16 years ago

For anyone looking for a quick work-around, MariusBoo noted that you can define your table like this:

db_table = '"django"."company"'

This will fool the quote function to think that your table is properly quoted. This also means that you have to specify the schema for each table manually.

I just verified that this solution works with r10837 and r11802 and PostgreSQL 8.3.8.

follow-up: 51 comment:47 by , 16 years ago

That would only work with read operation. When you trying to write to the db, it would failed because the Django assume the sequence as "schema.tablename"_column_id_seq, so you have to escape it as 'schema\".\"tablename'.

Specifying schema for each table is not a problem for me - explicit and no magic, just make it 'official' and documented.

http://stackoverflow.com/questions/1160598/how-to-use-schemas-in-django

comment:48 by , 16 years ago

Here goes...

The attached 'generic-db_schema-r11827.diff' is applicable to a recent trunk but I know there are failing tests with this one.

Especially when it comes to o2o and m2m relations.

I will try to sort them out as time goes but feel free to help. Identifying bugs and writing tests would be great.

I haven't tested the global db_schema settings at all but I will get there. I just wanted to get a patch out that doesnt conflict to much with trunk.

comment:49 by , 16 years ago

As I just found out... all of those m2m and o2o tests fail on a clean trunk as well. So for now the code seems to be in shape.

comment:50 by , 16 years ago

Ok. Fixed a issue with m2m models where the ends of the relation were in different schemas.

I would like to get some help on mysql testing. Test database creation is dangerous because mysql has an alias between 'create database' and 'create schema' so if someone uses the same schema in tests as in dev/production then the table might get recreated, data altered or in best case testing fails.

follow-up: 52 comment:51 by , 16 years ago

Replying to k4ml:

That would only work with read operation. When you trying to write to the db, it would failed because the Django assume the sequence as

"schema.tablename"_column_id_seq, so you have to escape it as'schema\".\"tablename'.

A minor annoyance in the quote trick is that creating indexes with the db_index field attribute doesn't work:

Installing index for myschema.mymodel model

Failed to install index for myschema.mymodel model: syntax error at or near "."

LINE 1: CREATE INDEX "myschema"."mymodel_myfield" ON ...

^

comment:52 by , 16 years ago

Replying to akaihola:

A minor annoyance in the quote trick is that creating indexes with the

db_indexfield attribute doesn't work:

Thanks for finding something valuable to test. And it does work with my patch already but now I test for it.

comment:53 by , 16 years ago

| Cc: | added |

|---|

by , 16 years ago

| Attachment: | 6148-generic-schema-support-r11951.diff added |

|---|

Patch updated to r11951 (just before multi-db merge). Django test suite runs w/o errors with sqlite3 and PostgreSQL.

follow-up: 55 comment:54 by , 16 years ago

Last patch (generic-db_schema-r11871.diff) when applied to the exact revisio it was generatd against, gives the following results:

- sqlite3 (patch should be a noop): 21 failures, 12 errors of 1171 tests

- PostgreSQL (8.3): 21 failures, 13 errors of 1172 tests

I've been working on this and reached the following state:

- Updated the patch to the last revision in Django trunk just before the multi-db landing: r11951.

- Fixed several bugs so the Django test suite runs on both PostgreSQL and sqlite3 without any error introduced by the patch.

See 6148-generic-schema-support-r11951.diff (download it to be able to review it).

I believe the next steps needed would be:

- Test against MySQL and Oracle and fix any problems so they also reach zero errors.

- Add more tests?

- Port to trunk as of now (post multi-db), hopefully we will be able to do that in the feature freeze period after 1.2beta1

IMHO if we do the effort on this we can provide a working patch against 1.2 so testers can play and report back w/o much trouble and then aim for inclusion in Django 1.3.

comment:55 by , 16 years ago

Replying to ramiro:

- Test against MySQL and Oracle and fix any problems so they also reach zero errors.

I've set up a box for running the test suite against MySQL 5.1.37. How would you like to receive results?

by , 16 years ago

| Attachment: | 6148-generic-schema-support-r12426.diff added |

|---|

Patch updated to r12426 (post multi-db merge). Django test suite runs w/o errors with sqlite3 and PostgreSQL.

comment:56 by , 16 years ago

I've updated the patch to a revision after the landing of multi-db.

TODO:

- Test against MySQL and Oracle and fix any problems so they also reach zero errors.

- Definitely add more tests, currently the

schemasmodeltests app is very basic.

by , 16 years ago

| Attachment: | 6148-generic-schema-support-r12426.test-failures.mysql.txt added |

|---|

Django test suite failures which appear with the patch but not without it in r12426

comment:57 by , 16 years ago

I ran the Django test suite both with and without the patch on r12426. On my Debian Lenny box the test suite is awfully slow (takes 3½h) and has several failures even without the patch. The above attachment shows the one failure which exists only when the patch is in use. IIRC this is identical to the results I got earlier with the patch against r11951.

comment:59 by , 16 years ago

| Cc: | added |

|---|

by , 16 years ago

| Attachment: | 6148-r12948.diff added |

|---|

Path updated to r12948, tested with postgresql 8.3 and sqlite3

comment:60 by , 16 years ago

| Owner: | set to |

|---|---|

| Status: | new → assigned |

Patch has been updated to trunk as of now. It still passes the Django test suite under postgreSQL 8.3 (including schema-specific test added by the patch) and sqlite3 (it contains some commented out logging code that will be removed later). Next steps:

- Convert doctests added by the patch to unittest

- Expand the tests

- Review existing schemas/tables introspection code (as it was done with the syncdb code for this trunk merge)

- Try to see what happens with MySQL

Any help with MySQL and Oracle is welcome.

follow-up: 63 comment:61 by , 16 years ago

Ramiro: one nit, your patch contains some stuff specific to you, ie, opening a log file in your home directory.

comment:62 by , 16 years ago

| Cc: | added |

|---|

comment:63 by , 16 years ago

Replying to anonymous:

Ramiro: one nit, your patch contains some stuff specific to you, ie, opening a log file in your home directory.

Yes, but that debugging function isn't being called from anywhere else so it's a no on and no log file is created. I've left that code in the work in progress patch on purpose because it isn't ready for review, particularly I was debugging an interaction I was seeing between the new multiple schema code and #12286 (reopened and fixed since then).

comment:64 by , 16 years ago

The latest patch (6148-r12948.diff) includes code in django/db/models/options.py (~line 106) in the Options.contribute_to_class method. The code looks like:

# Construct qualified table name.

self.qualified_name = connection.ops.prep_db_table(self.db_schema,

self.db_table)

if self.qualified_name == connection.ops.quote_name(self.db_table):

# If unchanged, the backend doesn't support schemas.

self.db_schema = ''

The problem is when this is applied to the latest (post multi-db) code from svn, the connection object refers to the default database. As a result the prep_db_table() and quote_name() methods used may be the wrong ones for this model. A quick fix would be to import connections and router from django.db instead of connection and then to query router for the correct database to use and then finally to get that from the connections dictionary before using it as shown above. Apart from the import line change this should be all that is needed before connection is used:

connection = connections[router.db_for_read(cls)]

However in this discussion on django-developers forum, Russell Magee pointed out that connection should not be used in Options at all. He feels that the unpatched code also has a bug since it uses connection to get the max_name_length and uses it to truncate the db_table member. He opened ticket #13528 for that issue.

Apart from the quick fix I cannot see an easy way to avoid this issue. The qualified_name member could be dropped from Options and the qualified table name could be calculated everywhere it is needed but that would mean a lot of changes to the code to replace all references to qualified_name. Also using qualified_name is quicker since it is calculated once and reused, it would be a pity to lose that. Perhaps qualified_name could be hidden behind a method that lazy-loads the member variable the first time it is used. Unfortunately I am not familiar enough with the database layer design to know if this is possible or even a good idea.

comment:65 by , 16 years ago

Patch 6148-r13366.diff contains:

- Post-r13363 updates.

- Implemented compatibility with multi-db as outlined by Peter Long (plong). I propose we wait for the core dev team to decide the strategy to be used to get connection-specific information out of the model's

_metaOptions instance (#13528, and the Query refactoring GSoC 2010 project). - Converted all tests to unit tests. They still need to be expanded.

Test suite passes for sqlite3 and postgresql 8.3 (modulo the unrelated #13799 failure currently present in trunk).

comment:66 by , 16 years ago

| Cc: | added |

|---|

When installing the patched r13366 I receive the following message:

byte-compiling /usr/local/python/lib/python2.6/site-packages/django/db/backends/oracle/creation.py to creation.pyc

SyntaxError: ('non-default argument follows default argument', ('/usr/local/python/lib/python2.6/site-packages/django/db/backends/oracle/creation.py', 70, None, 'def _create_test_db(self, verbosity=1, autoclobber=False, schema):\n'))

follow-up: 68 comment:67 by , 16 years ago

The current patch (r13366) does not support the inspectdb command (as far as I can tell).

comment:68 by , 16 years ago

comment:69 by , 15 years ago

| Cc: | added |

|---|

comment:70 by , 15 years ago

| Cc: | added |

|---|

comment:71 by , 15 years ago

| Cc: | added |

|---|

comment:72 by , 15 years ago

| Status: | assigned → new |

|---|

comment:73 by , 15 years ago

I saw you've just changed the current status to 'new'. I'm really interested to achieve this feature and become it a core-integrated one. Is there anything I can do to help?

Many thanks.

comment:74 by , 15 years ago

| Owner: | removed |

|---|

comment:75 by , 15 years ago

| Cc: | added |

|---|

I cannot assign a schema to m2m-tables. Tables like

- auth_group_permissions

- django_flatpages_sites

...and so on are still in my public-Schema.

I'm using PostgreSQL 8.4 with PostGIS Extension.

Another problem is the incompatibility with PostGIS: every geometry-related colums isn't added to the table.

comment:76 by , 15 years ago

| Cc: | removed |

|---|

comment:77 by , 15 years ago

| Cc: | added |

|---|

comment:78 by , 15 years ago

| Cc: | added |

|---|

comment:79 by , 15 years ago

| Cc: | added |

|---|

comment:80 by , 15 years ago

| Severity: | → Normal |

|---|---|

| Type: | → New feature |

comment:81 by , 15 years ago

| Cc: | added |

|---|

by , 15 years ago

| Attachment: | 6148-r16443.diff added |

|---|

comment:82 by , 15 years ago

| Cc: | added |

|---|---|

| Easy pickings: | unset |

| UI/UX: | unset |

Attached is the schema patch applied to r16443. Anyone care to try it out and run some tests? I tried to keep the schema changes intact as much as possible; I haven't otherwise done any testing on this.

by , 15 years ago

| Attachment: | 6148-r16443-2.diff added |

|---|

comment:83 by , 15 years ago

| Easy pickings: | set |

|---|---|

| Needs tests: | set |

looks like svn did not pull in all of the trunk changes. updated again and re-ran diff. attached new diff.

comment:84 by , 15 years ago

| Cc: | added |

|---|

comment:85 by , 15 years ago

Pretty nasty bug in this still - if you run tests which are left incomplete, Django will ask 'if you would like to try deleting the test database 'TEST DATABASE - the problem is that the _destroy_test_schemas method is connecting using your normal alias, which will hit your working database.

comment:86 by , 14 years ago

| Cc: | added |

|---|

comment:87 by , 14 years ago

| Cc: | added |

|---|

comment:88 by , 14 years ago

| Cc: | added |

|---|

comment:89 by , 14 years ago

| Cc: | added |

|---|

comment:90 by , 14 years ago

| Cc: | added |

|---|

comment:91 by , 14 years ago

Just a note, in case it's needed. The analogue of set search_path in Oracle is:

alter session set current_schema = SCHEMA

comment:92 by , 14 years ago

| Cc: | added |

|---|

comment:93 by , 14 years ago

I have the same need, at the moment I'm trying to use this postgresql specific "hack" https://github.com/tuttle/django-schemata

comment:94 by , 14 years ago

| Cc: | added |

|---|

comment:95 by , 14 years ago

The best solution I found was to configure postgresql to set a default "search_path" for the user I connect as from Django:

ALTER USER "djangouser" SET search_path to schema1, schema2, public;

All the django tables get created in "schema1" and there is zero additional configuration required on the Django side.

comment:96 by , 14 years ago

| Cc: | added |

|---|

follow-up: 98 comment:97 by , 14 years ago

| Cc: | added |

|---|---|

| Easy pickings: | unset |

I think I am going to be the next one to pick this up. I hope I will have time to work on this later this spring. But for now I have two questions:

When using MySQL or Oracle it seems you must create the schemas in testing prefixed with test_. This will give serious problems for Raw SQL users, and I guess there will be a lot of raw SQL users because multi-schema support is most important for legacy databases. Any good ideas? You could always write your SQL as:

cursor.execute("select * from %s where id = %%s" % mymodel._meta.qualified_name, (params,))

that looks ugly, but I can't figure anything better. Better API welcome. Although I don't care _that_ much, I use PostgreSQL... :)

The second question is if there is any core developer willing to support my work? I hope there is somebody interested, as otherwise it can be pretty hard to actually get the work finished and into core.

As said, I won't start working on this before I have time to do so. This means at least a month before I can start polishing the patch, likely a bit more.

comment:98 by , 14 years ago

Replying to akaariai:

I think I am going to be the next one to pick this up. I hope I will have time to work on this later this spring. But for now I have two questions:

When using MySQL or Oracle it seems you must create the schemas in testing prefixed with

test_. This will give serious problems for Raw SQL users, and I guess there will be a lot of raw SQL users because multi-schema support is most important for legacy databases. Any good ideas? You could always write your SQL as:

cursor.execute("select * from %s where id = %%s" % mymodel._meta.qualified_name, (params,))that looks ugly, but I can't figure anything better. Better API welcome. Although I don't care _that_ much, I use PostgreSQL... :)

When using raw SQL, I already assume that it's preferable to use the model introspection APIs to substitute in all table names, field names, etc, rather than hardcoding them, so I don't have any problem with that requirement.

I suppose it's a bit of an issue that ._meta is undocumented and technically private API, but it's already pretty well recognized that large swaths of it are de facto public (and I think there may even be a ticket for cleaning it up and documenting parts of it).

comment:99 by , 14 years ago

| Cc: | added |

|---|

comment:100 by , 14 years ago

| Cc: | added |

|---|

comment:101 by , 14 years ago

| Needs documentation: | set |

|---|---|

| Owner: | set to |

Initial status report for the work on this ticket. Using Meta: db_schema is somewhat working on PostgreSQL. Other backends will not work at all currently (even without schemas). Lacks most tests and many features, and of course lacks all polish.

Implementing the feature fully is much larger amount of work than one would expect. Luckily it seems there aren't any really hard problems left.

I started from the latest patch in this ticket. There was one big mistake in that patch: there is no way to have a ._meta.qualified_name which would be directly usable in queries. Different backends need different quoting etc for the qualified_name, thus it can only be resolved at the compiler.py stage.

To fix this, I change model._meta.qualified_name to a tuple (db_schema, db_table), where db_schema can be None. As a result of this, the patch implementation has a pretty big change to alias usage in queries: where currently first instance of each table will use the plain table name (ie. no alias at all), the schema support branch changes this in a way where even the first occurrence of the table will get an alias. There are two reasons:

- The implementation would get somewhat nasty without this: for example any column could either have alias, or qualified_name as the prefix, and one would need to check this each time when quoting the name. Same for where clause entries, order_by entries, aggregates and so on.

- I wonder if users really want to see queries like:

select "some_schema"."some_table"."col1", "some_schema"."some_table"."col2", "some_schema"."some_table"."col3" from "some_schema"."some_table" where "some_schema"."some_table"."col1" = 1

instead of:

select T1."col1", T1."col2", T1."col3" from "some_schema"."some_table" T1 where T1."col1" = 1

In addition I believe the always-alias implementation will make SQL generation faster and cleaner. The downside? .extra() users will have a fun time rewriting every: "some_table"."col1" to T1."col1".

So, the question is if the always-alias implementation has any chance of getting in. I really think it will result in cleaner queries in SQL, and it will simplify query generation. The generated query string should be an implementation detail, and .extra users will need to adapt to the changing queries.

If the above is seen as backwards incompatible, I will have to think of a way to get the old behavior back. This will likely come with a performance penalty, which is unfortunate as generating the column list is even currently somewhat slow for wide tables.

The work can be found from https://github.com/akaariai/django/tree/schemas. The bulk of the work resides in database introspection, and I haven't even touched oracle or mysql yet...

Sidenote: sql/query.py rev_join_map is now removed. There wasn't enough usages for it to keep it around. It was used in .combine() where it is easily replaced by using alias_map directly, and in .relabel_aliases, where it gave a really small performance boost.

comment:102 by , 14 years ago

Status report: more work uploaded to github. Now there is no always-alias or rev_join_map removal. The test suite now passes on pgsql and sqlite. MySQL is the next target. There is some major items still left (apart of supporting mysql and oracle):

- Each database alias should have an user-settable default SCHEMA. In addition SCHEMA_PREFIX is needed for Oracle and MySQL testing.

- on SQLite the dbtable should be prefixed with the schema (as in '%s_%s'). This is to support running tests using schemas on sqlite.

- The above two items will cause some refactoring to introspection, creation and query running.

- The creation and introspection APIs need to be reviewed and probably refactored.

- Support for renaming schemas for testing (dbschema 'dbschema1' will become 'test_default_dbschema1' and so on, that is 'test_%s_%s' % (connection_alias, dbschema)). Needed for backends where Django's database NAME and schemas live in the same namespace (MySQL, Oracle).

- Documentation

- And finally some more tests.

So, work is advancing but this feature is _much_ bigger than I anticipated. Again: work can be found from https://github.com/akaariai/django/tree/schemas. The feature should be already fully usable on PostgreSQL (using settings.DEFAULT_SCHEMA and model.Meta db_schema).

I think using always-alias would be a good idea, but it is backwards incompatible. Maybe if .extra() could be deprecated in some way, then adding always-alias to queries would work. I will create another ticket for query.rev_join_map removal.

comment:103 by , 14 years ago

| Cc: | added |

|---|

comment:104 by , 14 years ago

Unfortunately a new and somewhat annoying problem found. Initial sql (the raw sql in app/sql/*.sql files) is going to be problematic. The problem is that because project settings can change the qualified table names (by DEFAULT_SCHEMA or 'SCHEMA' in connection settings) you can no longer know what the table name is in the raw SQL file. In addition, on MySQL and Oracle the qualified table name will need to be changed in test runs to avoid namespace collisions, making it impossible to write a raw SQL file even if you know the settings' default schema.

When using raw SQL in Python you now need to use connection.qname(model), which will return a correctly quoted qualified table name for the model's table. However, in the initial SQL files you can not do that. I think I will not fix this for the initial implementation. Current use of the raw SQL files will work, but when using schema support you will have problems especially on Oracle and MySQL. This could perhaps be fixed later on by having for example ##schemaname## placeholder in the raw SQL files which is then replaced by the in-use schema name. But, as said, this is a problem for later times.

Current status of the feature: SQLite now has faked schema support, the schema name is just appended to the table's name. Both settings.DEFAULT_SCHEMA and per-alias SCHEMA configurations now work in addition to the Meta: db_schema. Introspection and database creation work fully, and full test suite pass on both SQLite and PostgreSQL even when global settings defines a custom schema (except for the above mentioned initial raw SQL issue). Making the tests pass involved a lot of raw SQL rewriting from "select * from sometable" to "select * from %s" % connection.qname(SomeModel). MySQL and Oracle are still totally broken.

EDIT: Good news everybody! I just realized that initial SQL can't be used in testing, so initial SQL in testing isn't a problem :)

comment:105 by , 14 years ago

But at least PostgreSQL allows you to set schema search path. Wouldn't this help with raw SQL?

comment:106 by , 14 years ago

mitar: Yes it would. However I don't think it is Django's business to change seatch_path, at least if not explicitly asked. In addition, you will have no problems on PostgreSQL except if you plan to install the same application in multiple schemas which could happen to 3rd party apps for example. Testing will not be a problem on PostgreSQL except in the previous case.

It isn't hard to write proper raw SQL which works in testing, you need to do cursor.execute("SELECT * FROM %s WHERE id = %%s" % connection.qname(ModelClass), (id_val,)). You do not need to do this connection.qname transformation if you do not plan to support schemas. Everything will work just as before in that case.

It would actually make sense to also have a connection.cols(ModelClass) -> named_tuple, as this would help in writing proper SQL with qualified names (DRY also).

Current situation: MySQL, PostgreSQL and SQLite all pass full test suite when running under custom schema. The django/db/backends changes will need to be reviewed and there is some need for a rewrite. It is bit of a mess at the moment. Supporting Oracle should also be pretty straightforward, as MySQL and Oracle have similar schema support from Django's perspective.

The docs should not advertise this feature too much. For most applications you really don't want to use schemas. On SQLite the schema support is just a compatibility hack, on MySQL you definitely do not want to run with global custom schema (use different database instead). However, on PostgreSQL and Oracle this could be really valuable especially for hand-written/legacy schemas.

comment:107 by , 14 years ago

| Cc: | added |

|---|

comment:108 by , 14 years ago

| Cc: | removed |

|---|

follow-up: 110 comment:109 by , 14 years ago

| Triage Stage: | Accepted → Design decision needed |

|---|

I now have something that passes all the likely-to-break tests on all four core backends. Running the full test suite on all backends takes a lot of time, so I haven't done that. Code at github.

The patch is rather large (+1559, -443 lines), and most of that is actual code changes. While lines of code isn't that important, this patch introduces a source of confusion into introspection. Previously, a Model could be identified uniquely by its table name. Unfortunately, there are multiple formats for qualified names:

- (None, table) from models: This format says that the table should reside in database default schema. This can be settings.DEFAULT_SCHEMA or the search_path default schema. The problem is, on PostgreSQL we really do not know what that search path default schema is.

- (someschema, table) from models

- (someschame, table) from database

When we have model with qualified name (foo, tbl) in SQLite it is turned into (None, foo_tbl) in the database (no real schema support on SQLite). On Oracle & MySQL in testing (foo, tbl) -> (default_foo, tbl) for alias default. This is because the production database's schemas and testing schemas live in the same namespace.

The end result of this is that it is really error-prone to do introspection and inspection. If you are asked if table foo, tbl exists you must know if the foo, tbl is from the model, or from database. If it is from a model, you must do conversion to database format first. The problem is, in the patch it isn't at all clear when you have database format, and when you have Model's format. It works, but mostly by luck. My current idea for fixing this is to introduce two namedtuples, DBQname and ModelQName so that it is possible to assert the methods get data in the format they expect.

But the most important question at the moment is how much we want schema support? I am sure this feature would cause headaches for schema migrations for example. Obviously I would like the schema support in core, but I think a final design decision is needed, as the feature as implemented is much larger and complex than one would have thought beforehand. So, I will mark this ticket as design decision needed.

One option would be to have schema support, but not for introspection. The problem with this approach is that you can't use the Django's default test runner, as you will not be able to flush & create the database without any introspection support. In effect, setting model's meta.db_schema to some value would imply managed=False.

Another option is to support this feature only when full schema support is available. That would mean PostgreSQL always, and MySQL and Oracle when you run the tests on different database instance, so that production and testing schemas do not collide. In effect, you would need TEST_PORT and/or TEST_HOST in DATABASES to be set to run the tests on MySQL or Oracle (only when using schema support, of course).

comment:110 by , 14 years ago

Replying to akaariai:

This can be settings.DEFAULT_SCHEMA or the search_path default schema.

Just a quick comment. Shouldn't this be a setting inside the DATABASES setting for each entry?

comment:111 by , 14 years ago

Correct. The rule is: use Meta.db_schema or connection's settings_dict['SCHEMA'] or settings.DEFAULT_SCHEMA.

by , 14 years ago

| Attachment: | 6148_django1.5.diff added |

|---|

comment:112 by , 14 years ago

The added patch (6148_django1.5.diff) should make it easier to test this feature. Short usage instructions:

- set model specific schema by using Meta.db_schema option (works similarly to db_table, also present for

ManyToManyFields) - set database specific schema by settings.py DATABASES setting 'SCHEMA'.

- when running raw SQL you will need to use connection.qname to get the schema qualified table name of a model (the tests contain plenty of examples, search for qname in regressiontests/queries/tests.py).

- note that on MySQL and Oracle the schemas is automatically prefixed by the database alias to avoid collisions between production and testing. This is the reason for the above qname usage - you do not know the table's qualified name, and it will be different in testing and production.

- do not test the patch on a database instance containing production data. It is possible that running tests will drop your production schemas instead of testing schemas. This should not happen, but the feature is currently alpha quality...

Otherwise basic usage should work including syncdb. There might be oddities in table creation SQL output from manage.py sql* commands, and inspectdb isn't too well schema aware currently.

comment:113 by , 14 years ago

The problem about running raw SQL will be introduced by the app-loading refactor anyways (#3591). The refactor introduces configurable table prefix per app which means a model will no longer know its table name. The problem isn't of course nearly as bad in the app-refactor, as the prefix is fully controllable by the project author. However, for reusable apps the problem is very similar to what is introduced by db-schemas.

I will need to update the patch, as it doesn't apply to head currently.

I don't believe there to be that much actual coding work left anymore. However, I am not sure if we have an agreement if we want this into core. A nice feature, but it also complicates things. For example 3rd party apps dealing directly with raw SQL will need updates (South comes to mind here).

comment:114 by , 14 years ago

| Needs tests: | unset |

|---|

I updated the patch to current master, it can be found from here.

The patch is currently at ~1700 lines added, 500 removed. So, it is a pretty big patch.

It does work on my select test list on every core db. I have tested it using the following tests: introspection transactions inspectdb fixtures queries extra_regress prefetch_related test_runner aggregation_regress dbschemas

GIS isn't yet dealt with.

Everybody who wishes this feature into core should test the patch. No need for code reviews, just test it for your use case. Testing should be simple: download the code from the branch (by using git directly, virtualenv git magic or using this link: https://github.com/akaariai/django/tarball/db_schemas). Preferably run your existing project's tests against the downloaded Django version. You can use DB schemas by either using database alias setting 'SCHEMA': 'my_testing_schema', or by using Model meta attribute db_schema = 'someschema'.

Please, do test this. I will assume there is no interest in this feature if nobody reports any test results.

There is still the question if we want to add the complexity of supporting DB schemas. The complexity comes from three sources:

- Introspection, creation: multischema awareness makes things more complicated here. This will also make life harder for 3rd party apps, South and external database backends come to mind here.

- Different backends have different requirements: PostgreSQL is easy here - it has fully namespaced schemas. Oracle and MySQL do not have these: Django's databases live in the same namespace as schemas. Thus, in testing the schemas must be 'test_' prefixed to avoid collisions. SQLite just uses table name prefixing. As an example of other complexities encountered: Oracle requires creating additional connections to create foreign keys properly. If somebody knows of a way to overcome this, I am all ears.

- Model's name isn't guaranteed to stay the same in testing and in production. This means problems for raw-SQL users (use connection.qname(MyModel) to get a fully qualified name). This also causes some complexities in the patch.

I am going to rebase the branch from time to time. That is, do not base your work on the branch.

comment:115 by , 14 years ago

I had some IRC-discussions with Andrew Godwin today. It was agreed that the feature should not aim to support testing on single database instance if the database doesn't support namespaced schemas (only PostgreSQL has them from Django's perspective). This should simplify the implementation somewhat, as there is no need to test-prefix the schema name.

I will try to rewrite the patch without support for test-prefixing the schemas (and also drop support for SQLite's prefix-the-table-name hack totally).

comment:116 by , 14 years ago

Catching up now on this ticket, I'll try to do some testing on Oracle soon.

I want to suggest that we also consider foreign-schema creation to be out of scope for databases like Oracle, where schemas and users are the same things. The current code that attempts to do all this through a single configured Oracle connection is a bit naive; it assumes that all users have the same password, and it appears to also assume that the configured Django user has the CREATE ANY TABLE privilege, which would be an unusual production setup.

Instead, a Django user who wants to manage multiple Oracle schemas with Django should set up a separate database connection for each schema. This way we're not trying to create or alter objects belonging to other users. For the REFERENCES issue, there are two cases -- either the referenced schema is not also managed by Django, in which case the user is responsible for ensuring the necessary grants, or both schemas are managed by Django, in which case we might better be able to automate the grant with the using() construct.

comment:117 by , 14 years ago

I agree on simplifying the Oracle hacks in the patch. The current patch tries to abstract too much of the differences between the databases away. Lets rip the current patch to absolute minimum, and then continue from there.

The "multiple schemas on Oracle -> multiple defined connections" needs some more thought on how to map the tables to the connections, and how to see that some aliases are just schemas for another alias, so that joins are allowed, and one can save model in schema "other" using the default connection. I hope this can be made to work...

comment:118 by , 14 years ago

@akaariai, I have interest in testing your patch with my code. I have a SaaS application and would like each tenant to have a particular schema. To set the schema based on the logged in user, all I have to do is to dynamically set the db.schema setting? Thanks in advance.

comment:119 by , 14 years ago

| Cc: | added |

|---|

comment:120 by , 14 years ago

I guess you will need to do something like this to use custom schema dynamically:

connection.settings_dict = connection.settings_dict.copy() # avoid changing anything global... old_schema = connection.settings_dict['SCHEMA'] connection.settings_dict['SCHEMA'] = wanted_schema

And then of course in "finally:" block reset the 'SCHEMA' back to its original value.

comment:121 by , 14 years ago

@akaariai Thanks for the patch! I have a project where I have multiple schemas and some of the tables have foreign keys between them. My environment consists of PostgreSQL and my settings contain multiple entries in DATABASES with their own schema defined (as suppose to hard-coded schemas in the model Meta classes). I ran the ./manage.py sqlall command and noticed a couple things:

- there are redundant

CREATE SCHEMAstatements before eachCREATE TABLE - foreign keys to tables from other schemas do not output the correct schema

comment:122 by , 14 years ago

@akaariai just an FYI I forked your repo and am attempting to fix these issues myself. The latter issue I mention above seems to be more deeply rooted in that the sql* commands seem to not take in account database routers (since it is not technically a syncdb command). This is further confusing since the sql* commands take a --database parameter. Your concern above regarding third-party apps could be resolved by users defining a router. The db_for_* methods are suitable since multiple database entries can be defined for simply defining separate schemas.

I am going to give integrating the database routers a whack.

comment:123 by , 14 years ago

I am sure everyone has mused over this many times, but this is a quick outline I came up with, derived from my use case including the primary goals, and a few scenarios/setups with a list of possible solutions (best first).

- Goals

- ability to use a non-default schema given the backend

- transparently route models to their respective schemas given a database

- handle schema creation (?, on the fence about this)

- Scenarios

- single database, one schema

- use the database backend default schema (out-of-box)

- override the

DATABASES['default']['SCHEMA'] - override

DEFAULT_SCHEMAin settings (this is kind of silly to have since it's so simple to define the schema in the database settings above) - define each model's

db_schemameta option (in my mind, this is only really useful for models that are not intended to be reusable outside a company/guidlines/etc.)

- single database, one schema

- single database, multiple schemas

- define a router that implements

schema_for_dbwhich returns a valid schema name orNoneif no preference- takes a

model,db, and**hints - in case there is some funky setup where a separate set of database settings is not enough, this provides a generic solution which allows re-use of models/app regardless of the database structure, nothing hard-coded on the models themselves

- takes a

- set each model's

db_schemameta option for ones not in the default schema (garbage)

- define a router that implements

- multiple databases

- non-issue since schemas do not span across databases

comment:124 by , 14 years ago

I have done no work on the manage.py sql* commands at all. I am not surprised they don't work. But, the foreign key issue seems worrisome. Is this from sql* or from the created database? I thought I had nailed the issues with foreign keys but I am not that surprised to find out this isn't true.

There are two ways the schemas can collide: between testing and production databases, and between two different database aliases both using the same schema. So, the "key" for schema must be "in_testing, alias, schema_name".

I am thinking of something like this as the DATABASES format. Assume there are two databases, default and other, and two schemas, schema1 and schema2:

DATABASES = {

'default':{

...

},

'other': {

...

},

'default:schema1': {

# Used in production for default's schema1 schema.

},

'default:schema2': {

...

},

'other:schema1': {

...

},

'other:schema2: {

...

},

'test:default:schema1' {

...

},

...

}

Unfortunately this gets large, fast. But, maybe that is how it must be.

For PostgreSQL the schema aliases would not be needed.

The routers approach is interesting because one could do some funny things with it: the typical example is querying a different schema for different clients. Worth investigating more. I know of an immediate use for this, so I am interested...

It is noteworthy that one must be able to do full-db syncs if syncdb support is added. There can be circular cross-schema foreign keys, and these can not be created before both schemas are created. This is impossible to do in single-schema syncs.

comment:125 by , 14 years ago

You can take a look at the couple commits (https://github.com/cbmi/django/commits/db_schemas) I've added. The latest one makes use of connection.qname as the primary means of determining the qualified name. This uses a new router method schema_for_db. I have not updated any tests yet, but for my project locally with multiple schemas all the sql* commands are working nicely.

comment:126 by , 14 years ago

| Cc: | removed |

|---|

comment:127 by , 14 years ago

I didn't have time to go through the changes in full, but on the surface of it they look good.

We will need to find some solution using DATABASES as the definition point for the connections for different schemas on databases where these are needed. I am not at all sure my idea above is the correct approach, it was just one concrete idea of how to define the schemas.

comment:128 by , 14 years ago

I agree, the one issue with only using a router is the lack of transparency of which schemas are in use or are available. I had thought about defining multiple database entries for each schema also, but it got a bit unwieldy and it kind of conceptually conflicts with the fact that cross-schema references are possible (at least in postgres).

Thanks for the quick review. I will am going to get the tests up-to-date at the very least and then continue musing over where/how to explicitly define the schemas in the settings.

comment:129 by , 14 years ago

Another idea for the DATABASES format:

# for MySQL

'default': {

...

'SCHEMAS': {

'myschema1': {'NAME': 'myschema1'},

'myschema2': {'NAME': 'myschema2'},

}

}

# for Oracle

'default': {

...

'SCHEMAS': {

'myschema1': {'USER': 'foo', 'PASSWORD': 'bar'},

'myschema2': {'USER': 'foo2', 'PASSWORD': 'bar'},

}

}

The idea is that one must define just the needed variables for each schema. On Postgres you don't need any additional info -> no need to define schemas. On Oracle, you need user and password, and on MySQL you need at least the database name (and possibly user and password).

One could define similarly TESTING_SCHEMAS.

There is still room for improvement: by default the NAME on MySQL should be the same as the schema's name, and the USER on Oracle likewise. The password on Oracle could be the same as the normal user's password. Thus often one could get away with just defining the TESTING_SCHEMAS. One would need to define the full SCHEMAS only when there are collisions, or some other need to use non-default values - and it is easy enough to spot the collisions, at least in testing.

The above SCHEMAS would be turned to full database aliases when needed (that is, in syncdb situations). They should never be visible to users.

The backend.convert_schema() would work something like this:

def convert_schema(self, schemaname):

return self.settings_dict['SCHEMAS']['USER'] # for Oracle

In testing one would overwrite settings_dict['SCHEMAS'] with settings_dict['TEST_SCHEMAS'].

comment:130 by , 14 years ago

| Cc: | removed |

|---|

comment:131 by , 14 years ago

Here is the latest patch with all the tests up-to-date and rebased with Django HEAD: https://github.com/cbmi/django/commit/dd13fc275e78b4e34fea65467f001a45dd4cb05e I have not implemented any of the SCHEMAS settings above.

comment:132 by , 14 years ago

| Cc: | added |

|---|

comment:133 by , 14 years ago

One remaining issue with how this is implemented is that qualified names that are sometimes referenced prior to a database being selected or a connection is available. All the qualified names being accessed from model._meta may not actually be fully-qualified since a database has not been selected and thus no schema (this of course assumes the db_schema options attribute has not been defined).

One possible solution is using the db_for_read or db_for_writerouter methods to determine with database within db/models/sql/query.py depending on the compiler being used, but that feels somewhat hideous. I thought a more appropriate place would have been db/models/sql/compiler.py, but that is too late since the qualified names are already referenced (no longer have the model to pass into the router).

Another solution (which seems to be more flexible) would be to store a reference of the model in the QName object. That way depending on the context of QName's use, the model will be available. To not break existing code, QName may need to be a class that behaves like a namedtuple, but has the model as an extra attribute to be used if needed. I will give this a go.

comment:134 by , 14 years ago

@akaariai are you going to DjangoCon in DC this year? I would like to finish this patch up by then.

comment:135 by , 14 years ago

I won't likely make it to DjangoCon. But, I will try to keep track of work done in this ticket.

As for now, I think the most important thing is to get the way databases are set up (both the settings side, and how production/test databases are handled) correct.

I am not 100% sure if I understand the routers idea... Still, having the model's opts at hand in the compiler would be nice, there are other uses for that, too.

comment:136 by , 14 years ago

| Cc: | added |

|---|

comment:137 by , 13 years ago

I think the correct way to define the schemas is to have two new database settings, SCHEMA_MAP and TEST_SCHEMA_MAP. The first one is required only Oracle, and it will contain the needed user information for production. You might also need this on MySQL if you have more than one alias on the same DB instance for some reason. The second one is required for Oracle and MySQL, and it contains the information about which schema to use in testing.

So, assuming that you have schemas 'schema1' and 'schema2', then the settings would look like:

DATABASES = {

'default': {

'engine': 'MySQL',

...,

'TEST_SCHEMA_MAP': {'schema1': 'test_default_schema1', 'schema2': 'test_default_schema2'}

},

'other': {

'engine': 'Oracle',

...,

'SCHEMA_MAP': {'schema1': {'user': 'schema1', 'password': 'top_secret'},

'schema2': {'user': 'schema2', 'password': 'top_secret'}},

'TEST_SCHEMA_MAP': {'schema1': {'user': 'test_schema1', 'password': 'top_secret'},...}

# Or, to mirror the production schemas, just do:

'TEST_SCHEMA_MAP': 'SCHEMA_MAP',

}

}

How the contents of SCHEMA_MAP and TEST_SCHEMA_MAP are interpreted is DB specific.

comment:138 by , 13 years ago

I like SCHEMAS and TEST_SCHEMAS better for names, but this configuration looks good. To answer your question from your previous comment, the new router method schema_for_db is simply to support routing operations to a particular schema (just like you can route operations to a database). For example all the auth_* and registration_* models may get routed to the auth schema, while social media related items might get routed to the social schema. More interesting scenarios are dealing with multi-tenant databases where each tenant has it's own schema rather than having to manage potentially hundreds or thousands of databases. Or if you are sharding your data to various logical shards across different databases you can route your data based on some modulus.

I am still a bit hesitant with being able to define db_schema on the Model.Meta class. I think it will be important to note in the docs that released apps should not define db_schema on any of the models to ensure it is not imposing any database constraints on the developer.

follow-up: 143 comment:139 by , 13 years ago

I just noticed your comment # Or, to mirror the production schemas, just do:.. that should be the default, so it does not need to be defined explicitly.

follow-up: 141 comment:140 by , 13 years ago

The tenant use-case is the one I am most interested in. And, for that reason I do think routers could be pretty good. To support that we should be able to sync the same model to multiple schemas. Actually, somehow we should be able to route a system of models to single schema (that is, you have foreign keys inside each schema created correctly).

It should be exceptionally clear in the docs that one should not confuse routing to different schemas with routing to different databases. The schemas are under control of one db instance, and you can have foreign keys across schemas, and you can use multiple schemas in a single query. This is not at all true when using different database aliases.

Maybe we could aim to have the routers implemented, but assume a single model is installed in only one schema. If a user wants to have it in multiple schemas, we do not try to support this at start. I believe having full support for same model in multiple schemas will be hard to implement.

comment:141 by , 13 years ago

Problem appears when you add 'south' to installed apps.

Patched Django 1.4.1

Unfortunately I cannot add traceback because TRAC treats me as spammer

comment:142 by , 13 years ago

| Cc: | added |

|---|

comment:143 by , 13 years ago

Replying to bruth:

I just noticed your comment

# Or, to mirror the production schemas, just do:.. that should be the default, so it does not need to be defined explicitly.

I'm going to disagree and advocate that this option not even be explicitly supported. It's bad enough already that the Oracle backend runs tests on the production-configured database (just in a different tablespace and schema). If you don't use a separate settings.py file with separate connection information for testing, and you mirror the production schemas, then your tests are actually going to run in your production schemas.

The other thing I want to comment on is that SCHEMA_MAP should be completely optional, unless you're running syncdb and have managed tables in those schemas. For day-to-day operation, Django should only be connecting as the Django user and does not need full authentication information for other schemas.

comment:144 by , 13 years ago

| Cc: | added |

|---|

comment:145 by , 13 years ago

| Cc: | added |

|---|

comment:146 by , 13 years ago

| Triage Stage: | Design decision needed → Accepted |

|---|

This is clearly accepted, the DDN was about how to implement this feature.

comment:147 by , 13 years ago

| Cc: | added |

|---|

comment:148 by , 13 years ago

| Cc: | added |

|---|

comment:149 by , 13 years ago

| Cc: | added |

|---|

comment:150 by , 12 years ago

| Cc: | added |

|---|

comment:151 by , 12 years ago

I think I now know how to implement the multitenant use case.

Lets introduce a TableRef class. By default it would look something like this:

class TableRef(object):

def __init__(self, schema, table, alias):

self.schema, self.table, self.alias = schema, table, alias

def as_sql(self, qn, connection):

schema_ref = (qn(self.schema) + '.') if self.schema else ''

table_ref = '%s%s' % (schema_ref, qn(self.table))

if self.alias:

return '%s %s' % (table_ref, self.alias)

else:

return table_ref

If you just set db_table and schema in model.Meta, then you get the above class instantiated and used in queries. But, you can also do:

class DynamicSchemaTableRef(TableRef):

@property

def schema(self):

# Return schema dynamically, based on thread-locals or a router

# or whatever you want. The schema would be effective both in

# queries and in migrations. So, you could install the same models

# to different schemas by using migrations.

class SomeModel(models.Model):

...

class Meta:

db_table = DynamicSchemaTableRef("$placeholder$", 'some_model', None)

Now, when you use SomeModel then references are autogenerated to point to correct schema by DynamicSchemaTableRef.

There are a couple of other use cases. For example:

class SubqueryRef(object):

def __init__(self, query, alias):

self.schema = None

self.query = query

self.alias = alias

def as_sql(self, qn, connection):

return '(%s) %s' % (self.query, self.alias)

Now, add a model:

class MyViewModel(models.Model)

col1 = models.IntegerField()

col2 = models.CharField()

class Meta:

managed = False

table = SubqueryRef("select t1.somecol as col1, t2.othercol as col2 "

" from base_table1 t1 inner join base_table2 t2 on t1.id = t2.t1_id "

" where some_conditions", "myviewmodel")

Now, MyViewModel.objects.all() will generate query:

select myviewmodel.col1, myviewmodel.col2 from (select t1.somecol as col1, t2.othercol as col2 from base_table1 t1 inner join base_table2 t2 on t1.id = t2.t1_id where some_conditions) myviewmodel

This allows one to do "views" in Django.

Similarly you could make AuditTableRef that can be used to query arbitrary points in time from audit table and so on. Django doesn't need to encourage these usage patterns, but they are available if you want to.

Two main problems with this approach:

- Somewhat extensive changes needed to sql.*, introspection and migrations. But, for schema support extensive changes are needed anyways.

- There could be some performance problems as this proposal adds again more class instances to simple queries (custom lookups already did that, lets see if anybody complains). Still, I think the problem should be manageable. We could also cache SQL in the table references, so that when no aliasing is needed (the common case) we get SQL generated in a very fast way.

comment:152 by , 12 years ago

| Cc: | added |

|---|

comment:153 by , 11 years ago

| Cc: | added |

|---|

comment:154 by , 11 years ago

| Cc: | added |

|---|

follow-ups: 156 159 comment:155 by , 11 years ago

I had an idea about this some time ago. Instead of building support for

class MyModel:

...

class Meta:

db_schema = 'foobar'

db_table = 'sometable'

we should implement something like this

class MyModel:

...

class Meta:

db_table = SchemaQualifiedTable('sometable', schema='foobar')

The idea is that the model's db_table is actually a class that knows how to produce SQL for itself. This could be used for schema qualified tables, and also for producing SQL view like behavior. That is, you could push in a custom View("select * from foobar") object. Then for QuerySet

MyModel.objects.all()

django would produce SQL

SELECT * FROM (select * from foobar) T1

the SQL inside the parentheses is produced by the View() class.

Even if users can access schema qualified tables by implementing a SchemaQualifiedTable class, we still need a bit more knowledge of the schema inside Django. The SchemaQualifiedTable class would work for existing databases, but the schema attribute of the table class must be supported by Django in some places (for example introspecting if the table already exists, and also automatic creation of the schema in migrations).

It would also be possible to use a dynamic schema if wanted (just implement a custom Table class that changes the schema dynamically), but Django wouldn't officially support dynamic schemas. The simple reason is that nobody is ever going to implement delete cascades for dynamic schemas. Also, multi-schema migrations seem like too hard of a problem to solve.

follow-up: 157 comment:156 by , 11 years ago

Replying to akaariai:

I had an idea about this some time ago. Instead of building support for [...]

This is intriguing but makes sense... Would you by chance have already drafted some code for this idea?

Also, what do you think of the approach in: https://github.com/bernardopires/django-tenant-schemas

Cordially

--

Philippe

comment:157 by , 11 years ago

Replying to pombredanne:

Also, what do you think of the approach in: https://github.com/bernardopires/django-tenant-schemas

Setting search path in PostgreSQL is a very common technique in case of multi-tenant applications, a lot of Rails people do it also, but that wouldn't work either in SQLite, nor in MySQL which Django have to support. Also, that project is not capable of handling cross-schema references, which is the most important feature of a true multi-tenant application IMO.

comment:158 by , 11 years ago

FWIW, I compiled a list of various implementations of multitenancy in Django, several of which are schema-based:

https://github.com/pombredanne/django-simple-multitenant/blob/master/README.rst

comment:159 by , 11 years ago

Replying to akaariai:

It would also be possible to use a dynamic schema if wanted (just implement a custom Table class that changes the schema dynamically), but Django wouldn't officially support dynamic schemas. The simple reason is that nobody is ever going to implement delete cascades for dynamic schemas. Also, multi-schema migrations seem like too hard of a problem to solve.

Multi-schema migrations are indeed a hard problem to solve. I think I have a fairly workable solution in place now (Postgres only), at https://django-boardinghouse.readthedocs.org/

My approach is a multi-tenancy approach (with some shared tables: usually the user table, the tenant table, and perhaps others), and requires that for all non-shared models, every migration operation that appears to be running on one of those models should be applied to each tenant schema in turn.

In practice, this makes migrations quite slow, even for small numbers of schemata. However, it does allow cross schema relations.

It uses setting the search path rather than explicitly including the schema name in the model.

comment:160 by , 11 years ago

| Cc: | added |

|---|

comment:161 by , 11 years ago

| Cc: | added |

|---|

comment:162 by , 10 years ago

| Cc: | added |

|---|

comment:163 by , 10 years ago

| Cc: | removed |

|---|

comment:164 by , 10 years ago

| Cc: | removed |

|---|

comment:165 by , 10 years ago

If we ever want to proceed on this ticket, I think we need to split this to two parts:

- Make core ORM usable with multi-schema setups

- Allow migrations for multi-schema setups